Cloud Equipment Market Will Grow From $110Bn in 2009 to $217Bn in 2014.

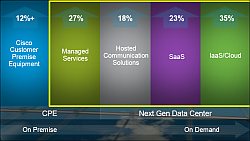

According to a Cisco-sponsored report by Forrester Research Inc, 2009 saw a significant uplift in sales of equipment into the cloud services sector despite the global recession. The figures show significantly greater growth in sales of equipment that supports next-generation managed services than in traditional Customer Premises Equipment.

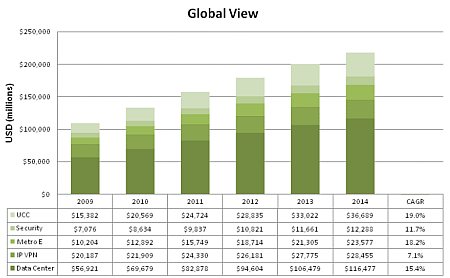

Forrester's forecast for this market is that sales will grow from $110Bn in 2009 to $217Bn in 2014, a CAGR of 15%. It is all very exciting, I guess, unless you are stuck selling on-premises equipment, in which case you probably need to start thinking of career alternatives.
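For anyone who wants to check the growth rate, here is a minimal sketch of the standard compound annual growth rate formula applied to the two figures quoted above. The function name `cagr` is my own, and I am assuming five compounding years between 2009 and 2014.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.10 means 10% a year)."""
    return (end_value / start_value) ** (1 / years) - 1


# Forecast figures quoted above, in $Bn. Five years is an assumption:
# 2009 is treated as the base year and 2014 as the end year.
rate = cagr(110, 217, 5)
print(f"Implied CAGR: {rate:.1%}")
```

The result comes out a little under 15%, so the headline figure looks like a rounded one.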

This information came from the Cisco Managed Services seminar at the Tower of London last week. What struck me was the huge number of elements that make up the big cloud services picture. I counted 62 different technology areas that Cisco claims make up the whole market. These include areas such as Computing as a Service, Platform as a Service, Infrastructure as a Service and Software as a Service. The range is mind-boggling.

This isn’t something that an ISP can undertake on a broad scale, at least not during the initial development stages of this market. You have to cherry pick your offerings.

Forrester have segmented the market into Unified Comms, Metro Ethernet, Security, Managed VPN (MPLS, I assume) and Data Center. This may help. Timico plays in all these market segments to a greater or lesser degree, which is somewhat reassuring.

In my mind you have to ignore the buzzwords and get on with satisfying what your customers need. In many cases customers will already have a good idea but there will be many more looking for guidance.

The case for Virtualization, which is a big part of the infrastructure play when it comes to talking about managed services and the cloud, is very strong.

I looked at one specific example of a company that had 217 machines/servers occupying 9 racks. On average each server has 500GB of storage (an assumption on my part but a reasonable one) but a storage utilisation of only 30 – 40%. Even at the top of that range, that's a usage of only 43TB out of a total available of 108TB (plenty of rounding here).
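The arithmetic behind those numbers is easy to reproduce. This is only a back-of-envelope sketch: the 500GB per server is my assumption from above, the function names are made up for illustration, and I am using decimal terabytes (1TB = 1000GB).

```python
SERVERS = 217
DISK_PER_SERVER_GB = 500  # assumed average, as stated in the text
GB_PER_TB = 1000          # decimal terabytes, an assumption


def total_capacity_tb(servers: int, disk_gb: float) -> float:
    """Raw disk capacity across the whole server estate, in TB."""
    return servers * disk_gb / GB_PER_TB


def used_tb(capacity_tb: float, utilisation: float) -> float:
    """Disk space actually in use at a given utilisation fraction."""
    return capacity_tb * utilisation


capacity = total_capacity_tb(SERVERS, DISK_PER_SERVER_GB)
for utilisation in (0.30, 0.40):
    used = used_tb(capacity, utilisation)
    print(f"{utilisation:.0%} utilisation: {used:.1f}TB used of {capacity:.1f}TB")
```

The 43TB figure corresponds to the 40% end of the range; at 30% the amount in use would be lower still.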

If this server estate could be distilled onto a robust Storage Area Network, that would represent a huge potential cost saving, just taking disk space into consideration. More memory is saved because these systems typically recognise which operating systems are being used by the Virtual Machines and do not replicate multiple instances of such software.

What's more, aggregated processing power means better individual VM performance. In other words, the processor capacity available to any single machine is far greater than it previously had access to on a single server. This inevitably results in performance efficiencies. The bandwidth story is the same. An individual standalone server is likely to be served by a maximum of 1Gbps whereas a VM will probably get 10Gbps.

The example I looked at will result in 217 VMs on a single 8U blade centre with a capacity of 32 servers, though we won't need all 32 for this specific customer.
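How many of the 32 blades are needed depends on how many VMs each blade can comfortably host, which is not a figure I have quoted here. As a rough sketch, with purely hypothetical densities and a made-up function name, the sum looks like this:

```python
def blades_needed(vm_count: int, vms_per_blade: int) -> int:
    """Smallest number of blades that can host vm_count VMs (ceiling division)."""
    return -(-vm_count // vms_per_blade)


VMS = 217
CHASSIS_CAPACITY = 32  # blades in the 8U blade centre

# The densities below are illustrative assumptions, not measured figures.
for density in (7, 10, 15):
    blades = blades_needed(VMS, density)
    print(f"{density} VMs per blade: {blades} of {CHASSIS_CAPACITY} blades")
```

Anything from about 7 VMs per blade upwards fits inside the one chassis, and higher densities leave blades spare.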

As Cisco has suggested, the market is undergoing a big change right now, one that requires significant investment in infrastructure. I suspect that many familiar names will fail to make it through. It will be interesting to see who emerges into the clear skies beyond the cloud 🙂

Charts are courtesy of Cisco with Data from Forrester Research Inc.